This article was originally published on Botium’s blog on February 9, 2021, prior to Cyara’s acquisition of Botium.

A quick summary of 7 important DOs and DON’Ts for designing a chatbot testing strategy. We keep seeing teams ignore these rather simple rules.

DOs and DON’Ts

DO plan for iterations

In German we say Rome was not built in a day, and the same applies to your chatbot training data. A robust chatbot is built through multiple iterations of training and testing, followed by ongoing monitoring and performance tuning: CODE, TEST, DEPLOY, REPEAT.

DON’T underestimate the need for constant performance measurement

Without measuring performance with real user conversations, you will never know if your chatbot is really working for your users.
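
One way to put numbers on this is to have a reviewer label a sample of real user messages with the intent they should have matched, then compare those labels with what the NLU model actually returned. The sketch below assumes such annotated logs in a hypothetical `LoggedTurn` format (the field names are illustrative, not taken from any particular platform) and computes overall accuracy plus per-intent precision, recall and F1.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class LoggedTurn:
    """One user message from production logs (hypothetical format)."""
    utterance: str
    predicted_intent: str  # what the NLU model returned at runtime
    true_intent: str       # label assigned afterwards by a human reviewer


def intent_metrics(turns):
    """Return (accuracy, {intent: (precision, recall, f1)}) for annotated turns."""
    tp, fp, fn = Counter(), Counter(), Counter()
    correct = 0
    for t in turns:
        if t.predicted_intent == t.true_intent:
            tp[t.true_intent] += 1
            correct += 1
        else:
            fp[t.predicted_intent] += 1  # model claimed this intent wrongly
            fn[t.true_intent] += 1       # model missed the real intent
    per_intent = {}
    for intent in set(tp) | set(fp) | set(fn):
        p_den = tp[intent] + fp[intent]
        r_den = tp[intent] + fn[intent]
        precision = tp[intent] / p_den if p_den else 0.0
        recall = tp[intent] / r_den if r_den else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        per_intent[intent] = (precision, recall, f1)
    accuracy = correct / len(turns) if turns else 0.0
    return accuracy, per_intent
```

Tracking these numbers per intent and per release makes it visible when a single intent degrades, even if overall accuracy barely moves.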

DO apply the 80/20 rule for testing utterances

Most teams are tempted to use 100% of the available data for training. Don’t. If the utterances you test with were also part of the training data, the test results tell you little about how the model handles input it has never seen. As a rule of thumb, use 80% of the data for training and hold back the remaining 20% for testing.
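
A minimal sketch of such a split, assuming the training data is available as a list of `(utterance, intent)` pairs. It splits each intent separately (a stratified split) so that rare intents are represented in the test set too; the function name, the fixed seed, and the rule that an intent with a single example stays in training are illustrative choices, not part of any Botium API.

```python
import random
from collections import defaultdict


def split_utterances(data, test_ratio=0.2, seed=42):
    """Split (utterance, intent) pairs into disjoint train and test lists.

    The split is done per intent (stratified): every intent with at least two
    examples keeps at least one utterance for testing and one for training.
    """
    by_intent = defaultdict(list)
    for utterance, intent in data:
        by_intent[intent].append(utterance)

    rng = random.Random(seed)  # fixed seed -> the same split on every run
    train, test = [], []
    for intent, utterances in by_intent.items():
        shuffled = utterances[:]
        rng.shuffle(shuffled)
        n_test = round(len(shuffled) * test_ratio)
        if len(shuffled) >= 2:
            n_test = min(max(n_test, 1), len(shuffled) - 1)
        else:
            n_test = 0  # a single example can only be used for training
        test += [(u, intent) for u in shuffled[:n_test]]
        train += [(u, intent) for u in shuffled[n_test:]]
    return train, test
```

Keeping the seed fixed means the test set stays the same between training runs, so scores from different iterations remain comparable.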

Only if the amount of available data is very small should you fall back to k-fold cross-validation to get some insight into the quality of your data.
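
For reference, a minimal k-fold sketch: the data is shuffled once and dealt into k folds, and each fold serves as the test set exactly once while the other k - 1 folds are used for training. `train_and_score` is a hypothetical callback standing in for "train the NLU model on `train`, then return a score such as accuracy on `test`"; connecting it to a real NLU engine is left out.

```python
import random


def k_fold_splits(data, k=5, seed=42):
    """Yield (train, test) pairs for k-fold cross-validation.

    Every item lands in exactly one test fold, so all data is used for
    testing once and for training k - 1 times.
    """
    if not 2 <= k <= len(data):
        raise ValueError("k must be between 2 and len(data)")
    items = list(data)
    random.Random(seed).shuffle(items)
    folds = [items[i::k] for i in range(k)]  # round-robin: sizes differ by at most 1
    for i in range(k):
        test = folds[i]
        train = [x for j, fold in enumerate(folds) if j != i for x in fold]
        yield train, test


def cross_validate(data, train_and_score, k=5):
    """Average the score returned by the hypothetical train_and_score(train, test)."""
    scores = [train_and_score(train, test) for train, test in k_fold_splits(data, k)]
    return sum(scores) / len(scores)
```

Averaging over k rounds lets a small data set contribute to both training and testing without any round testing on utterances it was trained on.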

DON’T rely on smoke tests or happy path tests

The 80/20 rule applies here as well: 20% of the work is spent in the comfort zone, and 80% goes into testing and bug fixing. Only 20% of your users will follow the happy path; the other 80% will break out of it. Prepare for this.

DO spend a reasonable amount of time on exploratory testing

Automated regression testing is well suited to finding defects that you know can happen, but it won’t help you find defects you don’t know about. Spend some time on exploratory (manual) testing: try to push your chatbot to its limits and beyond.

DON’T ignore the need to re-test after training

You can never know what effect adding training data at one end of your fine-tuned NLU model will have at the other end until you try it out. Run a full regression test of your NLU model every single time you make changes.
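
A regression check does not need to be elaborate to be useful. The sketch below compares the previous and the retrained model on the same labelled test set and lists the utterances the old model handled correctly but the new one no longer does. `old_predict` and `new_predict` are hypothetical callables standing in for calls to your NLU engine.

```python
def find_regressions(test_set, old_predict, new_predict):
    """Compare two NLU model versions on the same labelled test set.

    test_set: list of (utterance, expected_intent) pairs.
    old_predict / new_predict: callables mapping an utterance to an intent
    (hypothetical stand-ins for your NLU engine).
    Returns (utterance, expected, new_prediction) for every case the old
    model got right and the new model gets wrong.
    """
    regressions = []
    for utterance, expected in test_set:
        before = old_predict(utterance)
        after = new_predict(utterance)
        if before == expected and after != expected:
            regressions.append((utterance, expected, after))
    return regressions


def check_no_regressions(test_set, old_predict, new_predict):
    """Raise AssertionError (e.g. to fail a CI job) if anything regressed."""
    regressions = find_regressions(test_set, old_predict, new_predict)
    if regressions:
        raise AssertionError(f"{len(regressions)} regression(s): {regressions}")
```

Listing the individual utterances, rather than only a drop in accuracy, shows exactly where new training data pulled the model in the wrong direction.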

DO test processing of out-of-order messages

One of the most natural things a human user does is to scroll up the conversation history in the chatbot window and resume from a previous step, for example by clicking a button in an earlier message. Most chatbots fail this challenge unless they have been prepared for it.
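
To handle this, a bot has to know which step an incoming message belongs to. The toy bot below is a hypothetical illustration, not how any particular chatbot framework works: its button payloads carry the name of their step (e.g. `size:large`), so a button clicked in an earlier message updates that step instead of being misread as the answer to the current question. The test function replays that scenario.

```python
class OrderBot:
    """Toy two-step order bot (hypothetical, for illustration only).

    Button payloads have the form "<step>:<value>", e.g. "size:large",
    so the bot can tell which question a click answers, even if the
    button belongs to an earlier message in the history.
    """

    STEPS = ["size", "topping"]

    def __init__(self):
        self.slots = {}

    def next_question(self):
        for step in self.STEPS:
            if step not in self.slots:
                return f"Please choose a {step}."
        return f"Confirm your {self.slots['size']} pizza with {self.slots['topping']}?"

    def handle(self, payload):
        step, _, value = payload.partition(":")
        if step not in self.STEPS or not value:
            return "Sorry, I didn't get that. " + self.next_question()
        changed = step in self.slots  # True when an earlier step is revisited
        self.slots[step] = value
        prefix = f"Changed {step} to {value}. " if changed else ""
        return prefix + self.next_question()


def test_out_of_order_button():
    bot = OrderBot()
    bot.handle("size:small")
    bot.handle("topping:cheese")
    # The user scrolls up and clicks the "large" button from the first question.
    reply = bot.handle("size:large")
    assert reply == "Changed size to large. Confirm your large pizza with cheese?"
```

A conversation test like this one belongs in the regression suite, because out-of-order clicks rarely show up in happy-path scripts.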

Action Plan

Here are some suggestions for addressing these DOs and DON’Ts.

Establish a Continuous Testing Mindset

Testing is a crucial part of the development process. There is no such thing as a single testing phase when bringing a chatbot to life. Testing has to be part of the team’s daily business, just like coding, design, and monitoring.

Holistic Testing

For chatbots, as for software products in general, testing involves more than the unit tests written by the programmers.

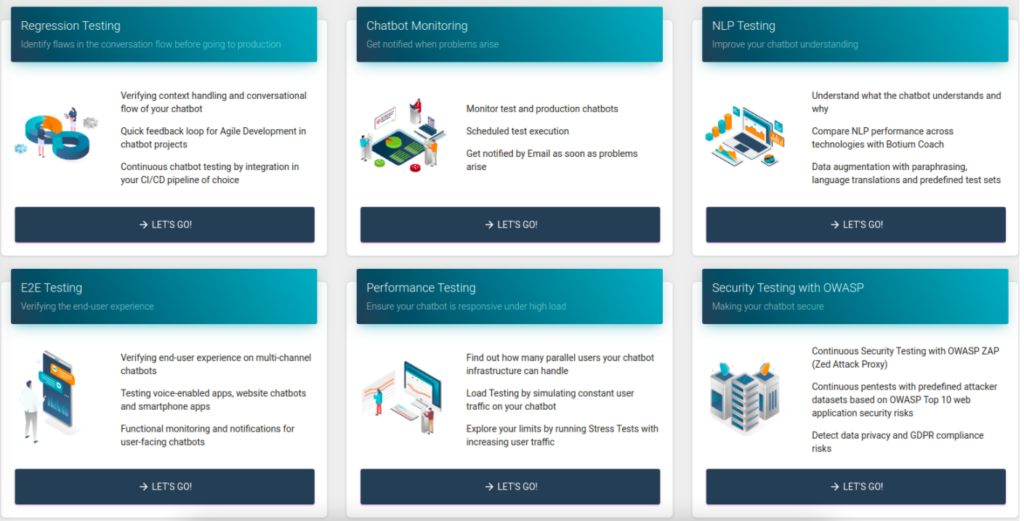

- Regression Testing on API level: Identify flaws in the conversation flow before going to production

- NLP Testing: Improve your chatbot’s understanding

- E2E Testing: Verify the end-user experience

- Voice Testing: Understand your users on voice channels

- Performance Testing: Ensure your chatbot is responsive under high load

- Security Testing: Make your chatbot secure

- Monitoring: Get notified when problems arise

Get the Right Tools In Place

Without the right tools, you will be lost. Botium helps you get these tools in place and integrate them into your chatbot development lifecycle.