This article discusses security threats and attack vectors of typical chatbot architectures, based on the OWASP Top 10 and adversarial attacks. It was originally published on Botium’s blog on September 30, 2021, prior to Cyara’s acquisition of Botium.

Chatbot Security Threats

The well-known OWASP Top 10 is a list of the top security risks for web applications. Most chatbots are available over a public web frontend, so all the OWASP security risks apply to those chatbot frontends as well. Two of these risks are especially important to defend against because, in contrast to the others, they are nearly always a serious threat for chatbots: XSS and SQL Injection.

More recently, another kind of security threat has emerged that specifically targets NLP models: so-called “adversarial attacks.”

Cross-Site Scripting (XSS)

A typical implementation of a chatbot frontend:

- There is a chat window with an input box

- Everything the user enters in the input box is mirrored in the chat window

- Chatbot response is shown in the chat window

The XSS vulnerability is in the second step. When the entered text includes malicious JavaScript code and the frontend mirrors it unescaped, the attack succeeds because the chatbot frontend runs the injected code:

<script>alert(document.cookie)</script>

This vulnerability is easy to defend against by validating and sanitizing user input, but even companies like IBM have published vulnerable code on GitHub that is still available or was fixed only recently.
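As a minimal sketch of the sanitizing step, the following Python snippet escapes the user's text before it is mirrored into the chat window. The function name `render_user_message` and the `user-message` CSS class are assumptions made for illustration; only `html.escape` comes from the Python standard library. A real frontend would typically rely on its templating framework's built-in escaping instead.

```python
import html

def render_user_message(text):
    """Return an HTML fragment for the chat window with the user's text escaped.

    Hypothetical helper for illustration, not taken from any chatbot framework.
    """
    # html.escape turns &, <, > and (with quote=True) both quote characters
    # into HTML entities, so the browser shows the text instead of running it.
    return '<div class="user-message">{}</div>'.format(html.escape(text, quote=True))

payload = "<script>alert(document.cookie)</script>"
print(render_user_message(payload))
# prints: <div class="user-message">&lt;script&gt;alert(document.cookie)&lt;/script&gt;</div>
```

With this in place, the payload from above is displayed as literal text in the chat window and no script element is created.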

Possible Chatbot Attack Vector

To exploit an XSS vulnerability, the attacker has to trick the victim into sending malicious input text.

- An attacker tricks the victim into clicking a hyperlink that points to the chatbot frontend and carries some malicious code

- The malicious code is injected into the website

- It reads the victim’s cookies and sends them to the attacker without the victim even noticing

- The attacker can use those cookies to get access to the victim’s account on the company website

SQL Injection (SQLi)

A typical implementation of a task-oriented chatbot backend:

- The user tells the chatbot some information item

- The chatbot backend queries a data source for this information item

- Based on the result a natural language response is generated and presented to the user

With SQL Injection, the attacker may trick the chatbot backend into treating malicious content as part of the information item:

my order number is "1234; DELETE FROM ORDERS"

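Developers typically trust their tokenizers and entity extractors to defend against injection attacks. These components are built for language understanding, not for input validation, so an extracted value should still be treated as untrusted input.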
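Whatever the entity extractor returns, the backend can avoid building SQL by string concatenation. The sketch below uses Python's built-in `sqlite3` module with a `?` placeholder; the `orders` table, its columns, and the function `find_order_status` are assumptions made up for this example, not part of any particular chatbot backend.

```python
import sqlite3

def find_order_status(conn, order_number):
    """Look up an order; order_number is whatever the entity extractor returned."""
    # The ? placeholder makes the driver bind order_number as data,
    # so it is never parsed as part of the SQL statement.
    row = conn.execute(
        "SELECT status FROM orders WHERE order_number = ?", (order_number,)
    ).fetchone()
    return row[0] if row else None

# Hypothetical in-memory table, for demonstration only
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_number TEXT PRIMARY KEY, status TEXT)")
conn.execute("INSERT INTO orders VALUES ('1234', 'shipped')")

print(find_order_status(conn, "1234"))                      # prints: shipped
print(find_order_status(conn, "1234; DELETE FROM ORDERS"))  # prints: None
print(conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0])  # prints: 1
```

The injected text is simply compared against the `order_number` column as one string, finds no match, and the table is left intact.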

Possible Chatbot Attack Vector

When the attacker has direct access to the chatbot frontend, an SQL injection can be exploited by the attacker personally (see example above) to run all kinds of SQL (or NoSQL) queries.

Adversarial Attack

This is a new type of attack specifically targeting classifiers — the NLP model backing a chatbot is basically a text classifier.

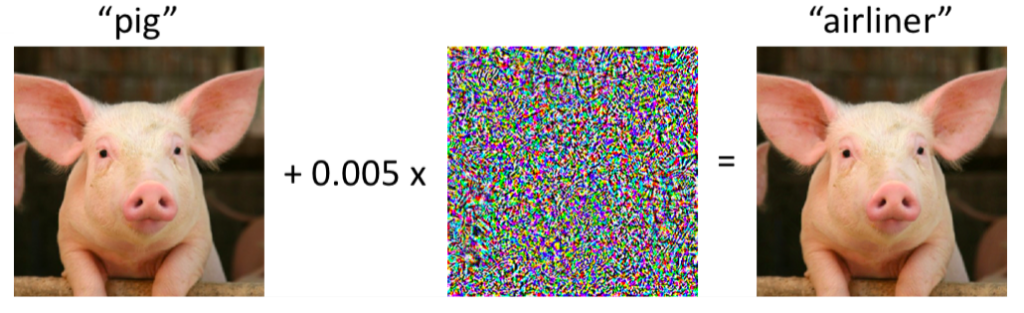

An adversarial attack tries to identify blind spots in the classifier by applying tiny, in the worst case invisible, changes (noise) to the classifier input data. A famous example is tricking an image classifier into a wrong classification by adding some tiny noise not visible to the human eye.

A more dangerous real-life attack is tricking an autonomous car into ignoring a stop sign by adding some stickers to it.

The same concept can be applied to voice apps — some background noise not noticed by human listeners could trigger IoT devices in the same room to unlock the front door or place online shop orders.

When talking about text-based chatbots, the only difference is that it is not possible to totally hide added noise from the human eye, as noise, in this case, means changing single characters or whole words.
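To make "changing single characters" concrete, here is a small Python sketch that generates one simple kind of text noise: swapping two neighbouring letters. The function `adjacent_swaps` is a hypothetical helper written for this article, not part of TextAttack or any other library. Each variant would then be sent to the NLP model to check whether the predicted intent stays the same.

```python
from typing import List

def adjacent_swaps(text: str) -> List[str]:
    """Return every variant of text with one pair of neighbouring letters swapped."""
    variants = []
    for i in range(len(text) - 1):
        a, b = text[i], text[i + 1]
        # Only swap two different letters, so spaces and punctuation stay in place
        if a.isalpha() and b.isalpha() and a != b:
            variants.append(text[:i] + b + a + text[i + 2:])
    return variants

for variant in adjacent_swaps("cancel my order"):
    print(variant)
```

For the input "cancel my order" this yields ten variants, among them "cancle my order" and "cancel ym order", all of which a human reader still understands without effort.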

There is an awesome article by the TextAttack makers on this topic: “What are adversarial examples in NLP?”

Possible Chatbot Attack Vector

For voice-based chatbots, one possible risk is that control is handed over to the attacker through manipulated audio streams that exploit weaknesses in the speech recognition and classification engine.

- The attacker tricks the victim into playing a manipulated audio file from a malicious website

- In the background, the voice device is activated and commands embedded into the audio file are executed

To be honest, it is hard to imagine a real-life security threat for text-based chatbots.

- User Experience is an important success factor for a chatbot. An NLP model not robust enough to handle typical human typing habits with typographic errors, character swapping, and emojis provides a bad user experience, even without any malicious attacker involved.

- It could be possible to trick a banking chatbot into doing transactions with some hidden commands and at the same time deny that the transaction was wanted, based on the chatbot logs … (I know, not that plausible …)

Security and Penetration Testing with Botium

Botium includes several tools for improving the robustness of your chatbot and your NLP model against the attacks above.

Penetration Testing with OWASP ZAP (Zed Attack Proxy)

Botium provides a unique way of running continuous security tests based on OWASP ZAP (Zed Attack Proxy). Read more in the Botium Wiki. It helps to identify security vulnerabilities in the infrastructure, such as SSL issues and outdated third-party components.

E2E Test Sets for SQL Injection and XSS

Botium includes test sets for running end-to-end security tests on device clouds and browser farms, based on OWASP recommendations:

- Over 70 different XSS scenarios

- More than 10 different SQL Injection scenarios

- Exceptional cases like character encoding, emoji flooding, and more

Humanification Testing

The Botium Humanification Layer checks your NLP model for robustness against adversarial attacks and common human typing behavior:

- simulating typing speed

- common typographic errors based on keyboard layout

- punctuation marks

- and more …
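The idea behind keyboard-layout-based typos can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Botium's implementation: the neighbour map `QWERTY_NEIGHBOURS` is a hand-written, partial excerpt of a QWERTY layout, and `keyboard_typo` is a hypothetical helper name.

```python
import random

# Partial QWERTY neighbour map, for illustration only (an assumption, not Botium data)
QWERTY_NEIGHBOURS = {
    "a": "qwsz", "e": "wsdr", "i": "ujko", "o": "iklp",
    "n": "bhjm", "r": "edft", "s": "awedxz", "t": "rfgy",
}

def keyboard_typo(text, rng):
    """Replace one character with a key located next to it on a QWERTY keyboard."""
    positions = [i for i, ch in enumerate(text) if ch in QWERTY_NEIGHBOURS]
    if not positions:
        return text  # no character we know how to mistype
    i = rng.choice(positions)
    return text[:i] + rng.choice(QWERTY_NEIGHBOURS[text[i]]) + text[i + 1:]

rng = random.Random(42)  # fixed seed so a test run is repeatable
print(keyboard_typo("transfer money", rng))
```

Feeding such variants to the NLP model and comparing the predicted intent with the prediction for the clean utterance gives a rough measure of how robust the model is against everyday typing mistakes.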

Paraphrasing

With the paraphraser it is possible to increase test coverage with a single mouse click — read on here.

Load Testing

With Botium Load Testing and Stress Testing you can simulate user load on your chatbot and see how it behaves under production load — read on in the Botium Wiki.