This article was originally published on Botium’s blog on May 18, 2021, prior to Cyara’s acquisition of Botium.

We are often asked to design a robust test strategy for a mission-critical enterprise chatbot. How is it possible to test a chatbot against every unexpected way users might behave in the future? How can anyone make confident assumptions about its quality when we have no idea what users will ask it?

Short-Tail vs Long-Tail Topics

While we do not own a crystal ball that shows future usage scenarios, in our experience a systematic approach combined with continuous feedback gives the best results. In almost every chatbot project, the use cases fall into three categories:

- short-tail topics: the topics that serve most of your users’ needs (typically a 90/10 rule applies: 90% of your users ask about only 10% of the topics)

- long-tail topics: all the other, somewhat exotic topics that the chatbot could also answer

- handover topics: topics that, for whatever reason, require a handover to a human agent

Examples of short-tail topics in the telecom domain:

- Opening hours of the flagship stores

- WLAN connectivity issues

- Questions on individual invoice lines

Examples of long-tail topics in the telecom domain:

- iPhone availability in the flagship stores

- International roaming conditions

- Lost SIM card or phone

Examples of handover topics in the telecom domain:

- Contract cancellation

- Business products and business customers
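To make the categorization concrete, here is a minimal sketch, assuming you can count how often each topic was requested (for example from conversation logs). The topic names, the counts, the `HANDOVER_TOPICS` set and the function `categorize_topics` are all hypothetical illustrations, not part of Botium. Topics flagged for handover are set aside first; the remaining topics are sorted by frequency, and the most frequent ones are labelled short-tail until together they cover about 90% of requests.

```python
from collections import Counter

# Hypothetical: topics the team has decided must always go to a human agent.
HANDOVER_TOPICS = {"contract_cancellation", "business_customers"}


def categorize_topics(topic_counts, short_tail_share=0.9):
    """Label each topic as 'short-tail', 'long-tail' or 'handover'.

    topic_counts: mapping of topic name -> number of user requests (assumed input).
    The most frequent non-handover topics are labelled short-tail until they
    cover `short_tail_share` of all non-handover requests.
    """
    categories = {}
    # Handover topics are decided by policy, not by frequency.
    for topic in topic_counts:
        if topic in HANDOVER_TOPICS:
            categories[topic] = "handover"

    remaining = Counter(
        {t: n for t, n in topic_counts.items() if t not in HANDOVER_TOPICS}
    )
    total = sum(remaining.values())
    covered = 0
    # Walk from the most to the least frequent topic.
    for topic, count in remaining.most_common():
        if covered < short_tail_share * total:
            categories[topic] = "short-tail"
        else:
            categories[topic] = "long-tail"
        covered += count
    return categories


# Hypothetical request counts for the telecom examples above.
counts = {
    "store_opening_hours": 420,
    "wlan_issues": 310,
    "invoice_lines": 180,
    "iphone_availability": 40,
    "roaming": 30,
    "lost_sim": 20,
    "contract_cancellation": 60,
}
print(categorize_topics(counts))
```

With these made-up numbers, the three short-tail topics cover 910 of 1000 non-handover requests; the 90% threshold is only a starting point that each project would tune.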

Getting Started

Our recommendations for the first steps in a chatbot project are always the same:

- Focus training and testing exclusively on the short-tail topics. For a customer support chatbot (the majority of the chatbots we’ve worked with), there are typically only a handful of topics for which you have to provide good test coverage

- Apart from that, leave the long-tail topics aside for now and design a clear handover process to a human agent

The challenge now is getting good test coverage for the short-tail topics, which is the really hard work in a chatbot project. I once wrote a blog post about how to gather training data for a chatbot.

Continuous Feedback

As soon as the chatbot is live, constant re-training is required. This process involves manual work: evaluating real user conversations that went wrong for some reason and deducing the required training steps. During this process, test coverage increases. For the short-tail topics, we can expect near 100% test coverage within several weeks of launch: these topics are asked about over and over again, and, complex as human language may be, there is only a finite number of ways to express an intent reasonably briefly.
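The feedback loop can be tracked with a simple metric. The sketch below assumes one possible definition of test coverage, namely the share of distinct logged user utterances per topic that already exist in the regression suite, and assumes topic labels come from the manual review described above. The functions `normalize`, `coverage_by_topic` and `add_reviewed_failures` are hypothetical helpers for illustration only.

```python
from collections import defaultdict


def normalize(utterance):
    # Very rough normalization (assumption): lower-case and collapse whitespace.
    return " ".join(utterance.lower().split())


def coverage_by_topic(logged, test_cases):
    """Share of distinct logged utterances per topic already covered by tests.

    logged: iterable of (utterance, topic) pairs from reviewed live conversations.
    test_cases: iterable of (utterance, topic) pairs in the regression suite.
    """
    known = {(normalize(u), t) for u, t in test_cases}
    seen = defaultdict(set)
    for utterance, topic in logged:
        seen[topic].add(normalize(utterance))
    return {
        topic: sum((u, topic) in known for u in utterances) / len(utterances)
        for topic, utterances in seen.items()
    }


def add_reviewed_failures(test_cases, reviewed_failures):
    """Append manually reviewed, previously failing utterances as new test cases."""
    existing = {(normalize(u), t) for u, t in test_cases}
    for utterance, topic in reviewed_failures:
        key = (normalize(utterance), topic)
        if key not in existing:  # skip duplicates
            test_cases.append((utterance, topic))
            existing.add(key)
    return test_cases


# Hypothetical suite and log for one short-tail topic.
tests = [("When does the flagship store open?", "store_opening_hours")]
logged = [
    ("when does the flagship store open?", "store_opening_hours"),
    ("Store hours today?", "store_opening_hours"),
]
print(coverage_by_topic(logged, tests))
add_reviewed_failures(tests, [("Store hours today?", "store_opening_hours")])
print(coverage_by_topic(logged, tests))
```

Each review cycle adds the failures to the suite, so for frequently asked topics the coverage figure should climb toward 1.0 as the set of common phrasings is exhausted.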

Despite the title “Testing Chatbots for the Unexpected”, we suggest a very down-to-earth approach: not testing for the unexpected or the unknown, but testing for “the most likely options” and aiming for high test coverage of those cases (this is possible because human language, while complex, is finite).

Automated Testing

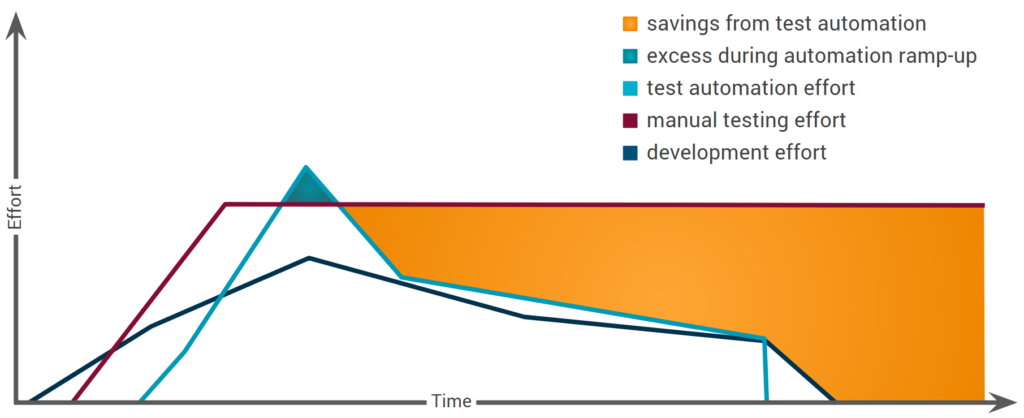

When it comes to test automation, the critical question is whether something is worth the initial automation effort.

- Establishing automated testing for the short-tail topics is a must

- Ensure a smooth handover process to a human agent

- Long-tail topics can be handled with manual testing in the beginning
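The following is a minimal sketch of an automated regression run covering the first two points: short-tail utterances must reach the expected intent, and handover topics must be routed to a human. It does not use Botium's own test formats or API. The `Case` class, `run_suite` and the keyword-based `toy_bot` are hypothetical; `toy_bot` merely stands in for whatever call returns your real chatbot's intent.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Case:
    utterance: str
    expected: str  # expected intent, or "handover" for a human handover


def run_suite(bot: Callable[[str], str], cases: list[Case]) -> list[str]:
    """Send every test utterance to the bot and collect failure messages."""
    failures = []
    for case in cases:
        actual = bot(case.utterance)
        if actual != case.expected:
            failures.append(
                f"{case.utterance!r}: expected {case.expected}, got {actual}"
            )
    return failures


def toy_bot(utterance: str) -> str:
    """Hypothetical stand-in for the real chatbot: a naive keyword matcher."""
    text = utterance.lower()
    if "cancel" in text:
        return "handover"
    if "open" in text:
        return "store_opening_hours"
    if "wlan" in text or "wifi" in text:
        return "wlan_issues"
    return "fallback"


suite = [
    Case("When does the flagship store open?", "store_opening_hours"),
    Case("My WLAN keeps dropping", "wlan_issues"),
    Case("I want to cancel my contract", "handover"),
]
print(run_suite(toy_bot, suite))
```

An empty failure list means the short-tail and handover checks passed; in practice such a run would be triggered after every re-training, while long-tail topics stay in manual testing until they prove frequent enough to automate.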

Getting the Tools

Without proper tools, you will be lost. Botium helps you on your path to successful chatbot testing.